If you’re looking for powerful insights, you want to ensure that your survey is getting the right responses. Of course, the first step to fielding an effective study is building a well-designed survey – but it doesn’t end there. What’s next? Data cleaning!

To get the most actionable and reliable responses from a survey, data cleaning is just as important as survey design. Data cleaning can help you determine the relevance, reliability, and accuracy of survey responses. It’s an imperative step to take before making insights-based decisions – which is why we recommend that all sample buyers conduct data cleaning as they receive survey responses.

What exactly is data cleaning?

Data cleaning is the process of reviewing the data you’ve collected to verify respondent attentiveness and response validity. In general, we give survey respondents the benefit of the doubt, since they’ve opted in to provide answers and receive an incentive for completing your survey. Data cleaning simply ensures that the collected data is high quality and reliable enough to support important business decisions.

As we mentioned, we expect our customers to perform data checks and data cleaning on the survey responses they collect. After cleaning, buyers can reconcile any unusable completes, and they are not held financially accountable for them.

Benefits of data cleaning

If data cleaning is an extra step in the survey process, why go through with it? A consistent data cleaning process can offer many advantages to your research.

Enhance data quality

When you apply survey data cleaning best practices, you can significantly improve data quality. Issues like incomplete answers or contradictory responses can skew your results, and when you rely on this data for modeling and algorithms, starting from the best possible data is crucial.

You can encourage high-quality data by writing strong questions, choosing the right participants, and using reliable survey platforms, but all of these steps happen before you distribute your surveys. Data cleaning maintains quality after your surveys are launched, adding a quality control step before the results are turned into insights.

Improve decision-making

High-quality data will lead to more effective decision-making. When your company relies on inaccurate data to determine its next steps, projects may not offer the return on investment (ROI) you’re looking for. Ensuring your data is meaningful can lead to well-informed decisions that help you grow your business.

For example, a survey about a new product may not be meaningful if it goes to the wrong demographic. If your product addresses a need in the over-65 population, responses from people in their 20s won’t be helpful.

Save money

You might use your survey data to mail out marketing materials or develop large-scale projects like new products. If you haven’t appropriately cleaned the data, you may end up printing marketing materials for people who aren’t interested. Or, you could develop a product people won’t buy. Cleaning your data can save you from significant investments that fall flat. In the end, you’ll save money.

Cleaner data can even lead to more revenue. When you use reliable data to make your decisions about marketing, products, or services, your audience is more likely to engage and buy from your company.

Increase productivity

When part of your data is unreliable, you end up spending time analyzing information that won’t help your company anyway. Data cleaning ensures you dedicate time only to valuable analysis, which leads to a more productive workflow.

Starting data cleaning while the survey is still in field can also streamline every downstream process, because every team works from the same accurate dataset and draws insight from it.

When should you conduct data cleaning?

There are a few different phases of a study when you should conduct data cleaning. Our team has first-hand experience with data cleaning on projects that we program and host. We’ll share our process for cleaning data and making recommendations for removals, as we recommend the same steps for buyers programming their own surveys.

Step 1: Pre-launch data checks

In addition to quality assurance in survey programming, we run simulated data through the survey platform to perform data checks on all survey elements before launching the project. We check the data to ensure the following elements are working as intended:

- Survey logic (including screening conditions, skipping conditions, etc.)

- Quota qualifications, logic, and limits

- All data is being captured for required questions

- Required questions are not skipped

- Response labels match the data map
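Two of the checks above (required questions are captured, and recorded codes match the data map) lend themselves to automation on simulated records. The sketch below is a minimal illustration, not our platform's tooling: the question IDs, the `DATA_MAP` and `REQUIRED` structures, and the record format are all hypothetical, and it does not test skip or quota logic.

```python
from typing import Any

# Hypothetical data map: question ID -> {response code: label}.
DATA_MAP: dict[str, dict[int, str]] = {
    "Q1_gender": {1: "Male", 2: "Female", 3: "Non-binary", 4: "Prefer not to say"},
    "Q2_owns_dog": {1: "Yes", 2: "No"},
}
# Hypothetical set of required question IDs.
REQUIRED = {"Q1_gender", "Q2_owns_dog"}


def check_record(record: dict[str, Any]) -> list[str]:
    """Return the problems found in one simulated respondent record."""
    problems = []
    # Every required question must have a captured value.
    for qid in sorted(REQUIRED):
        if record.get(qid) is None:
            problems.append(f"{qid}: required question not captured")
    # Every captured code must exist in the data map for that question.
    for qid, value in record.items():
        if qid in DATA_MAP and value is not None and value not in DATA_MAP[qid]:
            problems.append(f"{qid}: code {value!r} not in data map")
    return problems
```

Running `check_record` over every simulated record and reviewing any non-empty result gives a quick pass/fail view before launch.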

Step 2: Soft launch data checks

As a standard, we recommend that customers soft launch for about 10% of the total required sample or 100 completes, whichever is less. We use the data collected during the soft launch to perform the aforementioned data checks on live respondent data before proceeding to collect the entire sample.
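The soft launch rule is a simple "whichever is less" comparison. A minimal sketch follows; rounding 10% up to a whole complete is our assumption, since the rule does not say how to round.

```python
def soft_launch_size(total_completes: int) -> int:
    """About 10% of the required sample or 100 completes, whichever is less."""
    # Integer ceiling of 10% (assumption: round up to a whole complete).
    ten_percent = (total_completes + 9) // 10
    return min(ten_percent, 100)
```

For a 500-complete study this gives 50 completes; for a 2,000-complete study, 10% would be 200, so the 100-complete cap applies.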

Step 3: Full launch data cleaning

After the soft launch, we perform data cleaning twice over the course of survey fielding:

- At 60% of data collection

- At 90% of data collection

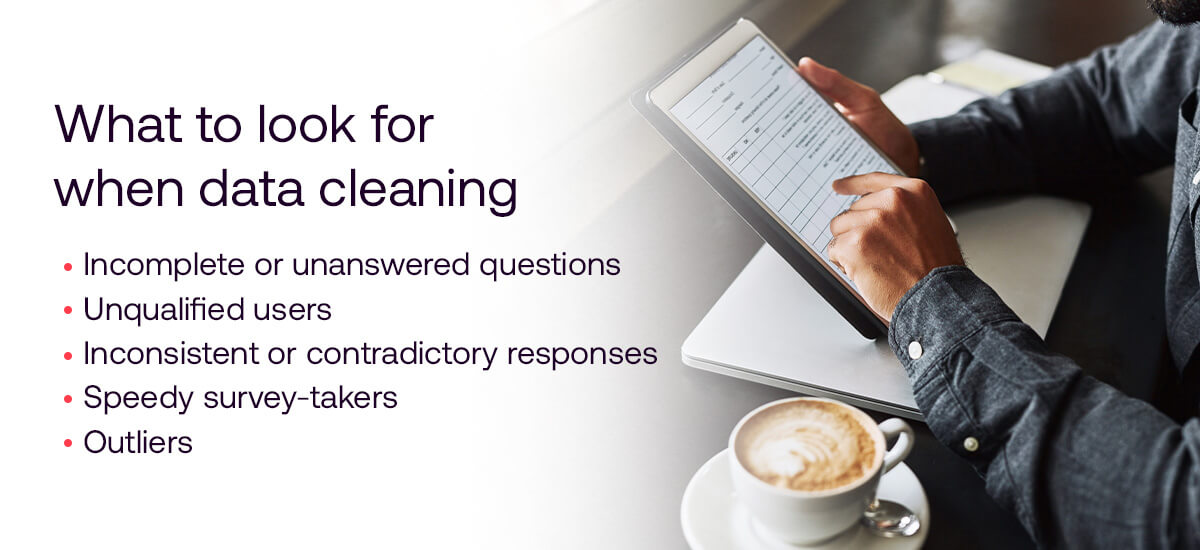

What to look for when data cleaning

Raw data can present many red flags. When starting the data cleaning process, it’s important to know these red flags and keep an eye out for them as survey results come in. Look out for the following factors.

Incomplete or unanswered questions

When respondents leave questions incomplete or unanswered, your overall results can be skewed. There are several reasons a respondent might not complete a survey: there may be a flaw in the survey logic, or they may not have been engaged with the survey’s content.

If you see a high number of dropped surveys, the survey design may be the cause. Irrelevant questions or confusing wording can lead people to stop before they finish.
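To see where in the survey people drop out, you can count the first unanswered question for each incomplete record. A spike at one question points to the design issues described above. This is a minimal sketch that assumes a hypothetical fixed `QUESTION_ORDER` with no skip logic; with skip logic, a legitimately skipped question would be miscounted as a drop-off.

```python
from collections import Counter

# Hypothetical question order for a short survey without skip logic.
QUESTION_ORDER = ["Q1", "Q2", "Q3", "Q4", "Q5"]


def drop_off_counts(records: list[dict[str, object]]) -> Counter:
    """Count, per question, how many respondents stopped at that question."""
    counts: Counter = Counter()
    for record in records:
        for question in QUESTION_ORDER:
            if record.get(question) is None:
                # First question this respondent did not answer.
                counts[question] += 1
                break
    return counts
```

Respondents who answered every question are not counted, so the total equals the number of incomplete records.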

Unqualified survey respondents

Unqualified respondents are people who don’t fit the survey criteria. While it’s best practice to use screener questions to make sure your survey reaches the right people, unqualified respondents can still slip into your survey pool.

The best way to clean out unqualified respondents is to review how they answered the screening questions before the survey. Screening questions determine whether someone is a match for a survey. For example, if you are conducting a survey about people’s favorite brand of dog food, you would first confirm that your respondents are dog owners.
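Using the dog food example, one way to catch unqualified respondents is to re-check the screener answer and compare it with related answers given later in the survey. The field names `owns_dog` and `num_dogs` are hypothetical, and the follow-up question is an assumed addition for illustration.

```python
def screener_flags(record: dict) -> list[str]:
    """Flag respondents who failed the screener or contradict it later."""
    flags = []
    if record.get("owns_dog") != "Yes":
        flags.append("did not qualify on screener")
    elif not record.get("num_dogs"):
        # Missing or zero: the later answer contradicts the screener.
        flags.append("screener says dog owner, but reports no dogs later")
    return flags
```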

Response outliers

Some respondents may submit answers that fall far beyond the average participant’s response. For example, a survey question may ask about the number of hours spent watching television each week. A respondent might write 70 hours — whether or not it’s true, this number is well outside the average answer. Outliers like these can skew your results.
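The article doesn't prescribe an outlier rule, so the sketch below swaps in one common convention as an assumption: Tukey's fences, which flag values more than 1.5 interquartile ranges below the first quartile or above the third. It uses Python's standard `statistics.quantiles`. Whether a flagged value is removed remains a researcher's judgment call.

```python
import statistics


def iqr_outliers(values: list[float], k: float = 1.5) -> list[float]:
    """Return values outside Tukey's fences (Q1 - k*IQR, Q3 + k*IQR)."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]
```

With weekly TV hours of `[5, 7, 8, 10, 10, 12, 14, 15, 70]`, the quartiles are 7.5 and 14.5, the upper fence is 25, and only the 70-hour answer is flagged.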

How do you conduct data cleaning?

Typically, we clean the data looking at the following survey elements and question types, though each may not be applicable to every survey:

Assess length of interview (LOI)

It is important to look at how much time a respondent spent on a particular question, or on the survey as a whole. A very short time can indicate that the respondent selected answers without thoroughly reading the question or carefully thinking about their response.

As a standard, we look at the median LOI as the expected time it takes to complete the survey. The industry definition of a “speeder” is any respondent who has completed a survey in less than ⅓ of the median LOI.
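The speeder definition translates directly into code: compute the median LOI, take a third of it, and flag anyone strictly below that threshold. This sketch assumes LOI is available per respondent in seconds, keyed by a respondent ID.

```python
import statistics


def find_speeders(loi_seconds: dict[str, float]) -> list[str]:
    """Return IDs of respondents who finished in under 1/3 of the median LOI."""
    median_loi = statistics.median(loi_seconds.values())
    threshold = median_loi / 3
    return sorted(rid for rid, t in loi_seconds.items() if t < threshold)
```

For example, if the median LOI is 600 seconds (10 minutes), the threshold is 200 seconds, so a respondent who finished in 150 seconds is flagged.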

By default, we remove speeders from the survey results, and we add survey validation that automatically terminates respondents who complete the survey at or under the speeder threshold time. We use the quality term redirect to tell our Marketplace partners why the respondent was terminated.

Please note that survey validation must be implemented after a soft launch (not before), because the only way to accurately gauge LOI is with surveys that are in field. Setting a speeder term before launch can, and often does, terminate valid respondents.

Straightlining / Patterned Responses

Another area of data cleaning is the responses to grid questions. If a respondent selects the same answer option (for example, “C”) over and over, their engagement in the survey may be suspect. By default, we flag respondents who select the same response for at least five rows in a grid.
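A straightlining flag can be computed from a respondent's answers to one grid, listed row by row. This sketch reads "at least five rows" as five consecutive rows, which is our interpretation. If you instead count identical answers anywhere in the grid, `collections.Counter` would be the tool.

```python
def longest_run(answers: list[str]) -> int:
    """Length of the longest run of identical consecutive answers."""
    best = run = 0
    previous = None
    for answer in answers:
        run = run + 1 if answer == previous else 1
        previous = answer
        best = max(best, run)
    return best


def is_straightliner(grid_answers: list[str], min_rows: int = 5) -> bool:
    """Flag a grid where the same option was chosen on min_rows consecutive rows."""
    return longest_run(grid_answers) >= min_rows
```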

Respondents also create patterns on grid questions, though these are less obvious in the data, and thus harder to identify.

We think about data cleaning during the programming process, and we often program in validation to flag respondents who straightline specific questions in a survey.

Respondents who create patterns or pictures with their responses must be manually identified, though visualizing the data can help to identify these respondents.

Text Open End Questions

We recommend asking only one open-ended question for every five minutes of respondent time to yield the best results. So, for a 15-minute survey, we recommend no more than three open-ended questions.

Too many open-ended questions can lead to respondent fatigue. A need for many open-ended questions can also indicate that qualitative research should be done before creating a quantitative online survey. See our blog on quantitative and qualitative research to help determine whether your study should be approached differently.

To clean open-end responses, it helps to sort them in alphabetical order so you can quickly spot low-effort answers such as “good” or nonsensical strings such as “dfksjfdkj.”
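The alphabetical sort is easy to script, and a couple of rough heuristics can pre-flag rows for a human reviewer. Both heuristics here are assumptions, not an established method: a short, hypothetical list of generic answers (like “good”) and a “long word with no vowels” test for keyboard mashes (like “dfksjfdkj”). Expect false positives and negatives; the flags only decide what to read first.

```python
import re

# Hypothetical list of generic low-effort answers; tune it for your study.
LOW_EFFORT = {"good", "ok", "okay", "nice", "fine", "none", "n/a", "idk"}


def flag_open_end(text: str) -> bool:
    """Heuristically flag a low-effort or gibberish open-end answer."""
    cleaned = text.strip().lower()
    if cleaned in LOW_EFFORT or len(cleaned) < 3:
        return True
    # Gibberish heuristic: a word of 5+ letters with no vowels (or "y").
    return any(
        len(word) >= 5 and not re.search(r"[aeiouy]", word)
        for word in re.findall(r"[a-z]+", cleaned)
    )


def sorted_for_review(responses: dict[str, str]) -> list[tuple[str, str, bool]]:
    """Sort (respondent ID, text) pairs alphabetically by text and attach a flag."""
    ordered = sorted(responses.items(), key=lambda item: item[1].strip().lower())
    return [(rid, text, flag_open_end(text)) for rid, text in ordered]
```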

Inconsistent or Unrealistic Responses

Inconsistent or unrealistic responses can take place on a number of different question types, including Numeric Open End and Single Select.

We advise researchers to think about unrealistic responses when designing and programming the survey, because validation can be used to curb impractical responses. Here are some examples of impractical responses to watch for.

How many times have you gone for a run in the past 12 months?

If a respondent answers 600, that answer is likely unrealistic because there are only 365 days in a year.

How many hours a week do you watch TV?

If a respondent answers 75 hours a week, that answer is likely unrealistic: there are 168 hours in a week, and typically about 40 are spent working and 56 sleeping, which leaves only 72 hours for all other activities.
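Both examples boil down to range checks on numeric open ends, which can be written as data cleaning rules or as in-survey validation. The limits below are assumptions taken from the reasoning above (at most one run per day; TV hours capped at 168 − 40 − 56 = 72), and the field names are hypothetical.

```python
# Assumed plausibility limits per numeric question: (minimum, maximum).
LIMITS = {
    "runs_past_12_months": (0, 365),  # assumption: at most one run per day
    "tv_hours_per_week": (0, 168 - 40 - 56),  # 72 hours left after work and sleep
}


def implausible(record: dict) -> list[str]:
    """Return the fields whose values fall outside the assumed limits."""
    flags = []
    for field, (low, high) in LIMITS.items():
        value = record.get(field)
        if value is not None and not (low <= value <= high):
            flags.append(field)
    return flags
```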

What is your birth year and age?

If a respondent selects “1988” as their birth year in a single select question, and then at the end of the survey selects “45-54” as their age, their data is inconsistent.
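A birth year/age check has to account for when the survey is fielded and for whether the respondent's birthday has passed. For example, someone born in 1988 was 33 or 34 in 2022, but 35 or 36 in 2024. This sketch assumes age brackets are labeled as `"low-high"` with a plain hyphen; open-ended brackets like “65+” would need extra handling.

```python
def possible_ages(birth_year: int, survey_year: int) -> tuple[int, int]:
    """Youngest and oldest age a respondent born in birth_year can be."""
    # Before their birthday they are one year younger than after it.
    oldest = survey_year - birth_year
    return oldest - 1, oldest


def birth_year_matches_bracket(birth_year: int, bracket: str, survey_year: int) -> bool:
    """True if any possible age falls inside an age bracket such as '35-44'."""
    low, high = (int(part) for part in bracket.split("-"))
    youngest, oldest = possible_ages(birth_year, survey_year)
    return youngest <= high and oldest >= low
```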

What is your relationship status? Number of children in your home? Total number of people in your household?

If a respondent indicates that they are married and have three children, but then says there are three people in their household, their data is inconsistent: the respondent and their three children alone make four people.
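This cross-question check compares the reported household size with the smallest household the other answers allow. One assumption deserves a loud label: it counts a spouse or partner as living in the home, which is not always true. The status labels in `partner_statuses` are hypothetical answer options.

```python
def household_is_consistent(
    relationship: str,
    children_in_home: int,
    household_size: int,
    partner_statuses: tuple[str, ...] = ("married", "living with partner"),
) -> bool:
    """Check that household size is at least the minimum implied by other answers."""
    # The respondent plus the children they report living in the home.
    minimum = 1 + children_in_home
    # Assumption: a spouse or partner lives in the same household.
    if relationship.lower() in partner_statuses:
        minimum += 1
    return household_size >= minimum
```

Because of the cohabitation assumption, a failure here is better treated as one flag to review than as automatic grounds for removal.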

When should you reconcile survey responses?

Overall, it is up to the researcher or subject matter expert to determine which responses are unrealistic and which respondents to remove from the dataset. We recommend reconciling any respondent who fails these checks. However, if your study has a very low incidence rate, it may be worthwhile to remove only respondents who fail two or more checks, and to carefully review the data of those who fail just one.
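The recommendation above can be written as a small decision rule over the number of failed checks. This sketch encodes only that rule. The `"review"` outcome marks respondents whose data a researcher should examine before deciding, and the `low_incidence` switch is a hypothetical parameter standing in for the researcher's judgment about the study.

```python
def reconcile_decision(failed_checks: int, low_incidence: bool = False) -> str:
    """Return 'keep', 'review', or 'reconcile' for one respondent."""
    if failed_checks == 0:
        return "keep"
    if not low_incidence:
        # Default recommendation: reconcile anyone who fails a check.
        return "reconcile"
    # Low-incidence studies: remove repeat failures, review single failures.
    return "reconcile" if failed_checks >= 2 else "review"
```

In practice, `failed_checks` could be the count of flags raised by the speeder, straightlining, open-end, and consistency checks for that respondent.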

We allow respondents to be reconciled from the date of the complete until the last day of the following month. However, if you plan to reconcile, we suggest doing so as quickly as possible, because reconciling poor-quality completes benefits both you and our supply ecosystem.
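Because the window ends on the last day of the month after the complete, its length varies with the calendar. A minimal sketch using Python's standard `calendar.monthrange` gives the deadline for any complete date:

```python
import calendar
from datetime import date


def reconciliation_deadline(complete_date: date) -> date:
    """Last day of the month following the month of the complete."""
    year, month = complete_date.year, complete_date.month + 1
    if month == 13:  # a December complete rolls over into January
        year, month = year + 1, 1
    last_day = calendar.monthrange(year, month)[1]  # number of days in that month
    return date(year, month, last_day)
```

For example, a complete on January 15, 2024 can be reconciled through February 29, 2024, and a complete on December 5, 2023 through January 31, 2024.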

Now that you have a more thorough understanding of data cleaning, we hope you’ll implement everything you’ve learned! If you have questions about how to clean your data, please talk to your Cint representative or contact us for more information.

Learn more from Cint

At Cint, our research technology allows you to build effective surveys with reliable data. With features like our Quality Program, we can gauge factors like demographics and consistency. Learn more about our capabilities by contacting our team today.